AI Updates #3

The latest company news, AI infrastructure, and progress with AI towards scientific discoveries, engineering, education, and more.

Welcome, everyone, to AI Updates #3, where I share the highlights of AI advances with you.

I hope you all had a great Christmas and a chance to relax and eat some turkey (or whatever your holiday dish of choice is). As we approach the end of 2025, it’s quite something to look back and see how fast AI has moved and how big the changes have been over the past 12 months. But as fast as things are moving now, I fully expect them to move a whole lot faster in 2026.

So buckle up, and hang on tight as we accelerate up the curve!

“AI is the new electricity.”

— Andrew Ng

Model Updates & News

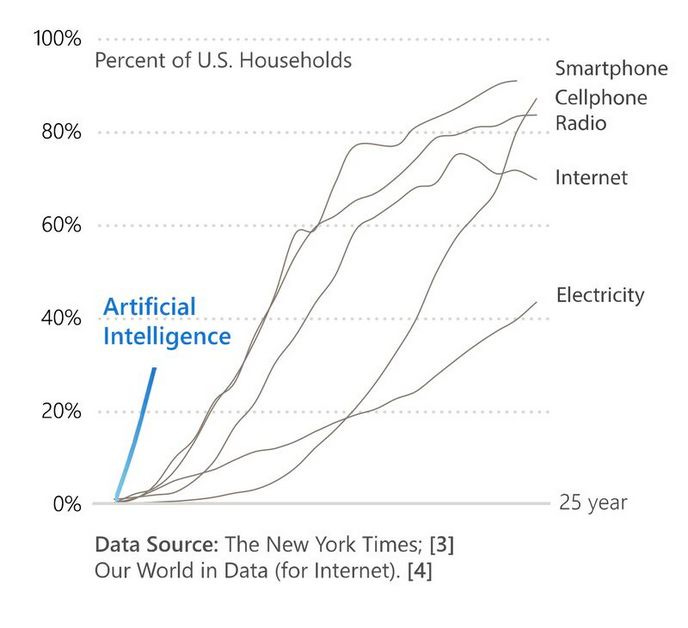

Is AI a bubble? I’d say definitely not. Not only is adoption faster than for any other comparable technology, but it’s underpinned by physical infrastructure like power plants, data centers, and chip fabs.

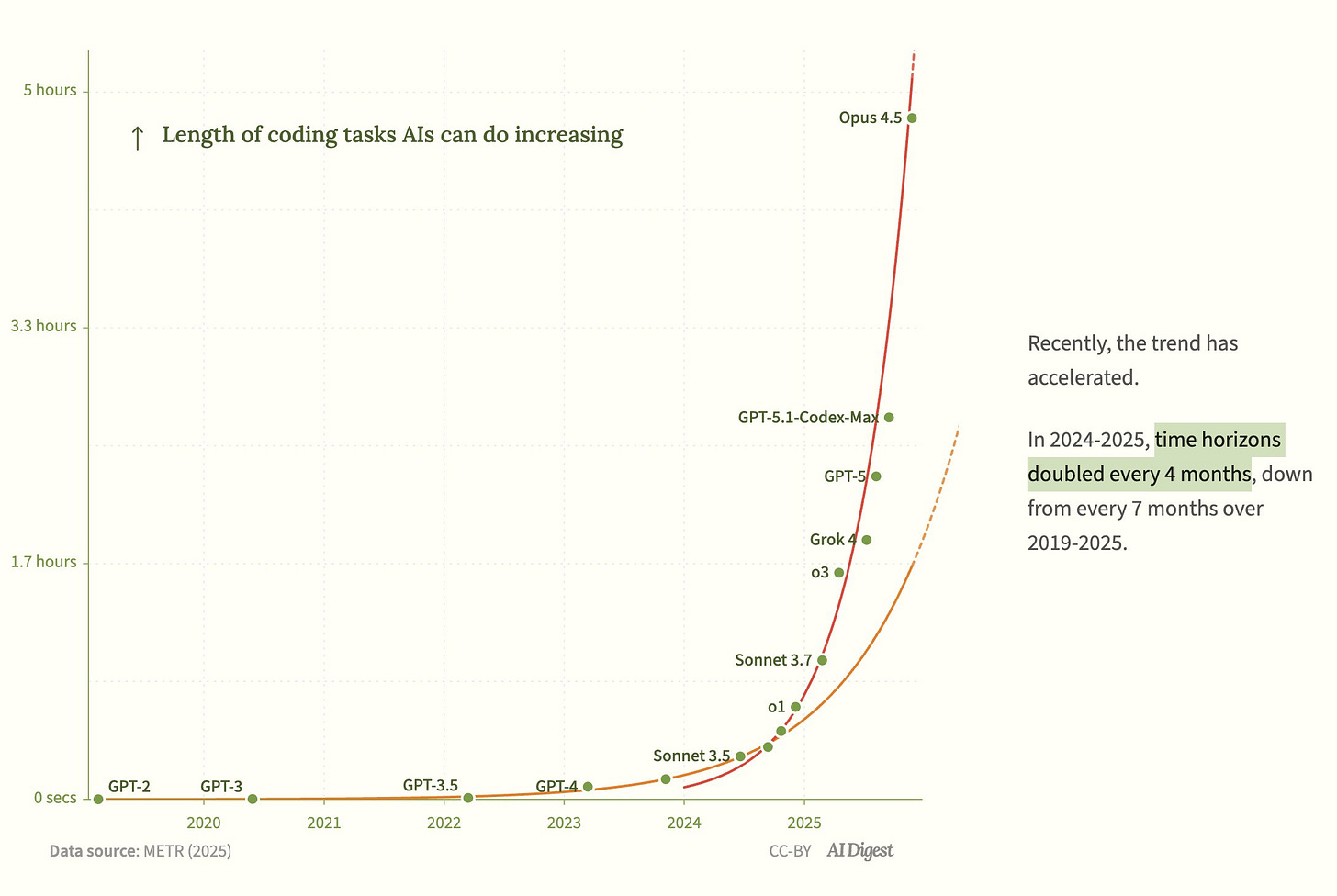

The length of time an AI agent can work on a coding task isn’t just increasing exponentially; the growth itself is speeding up. Between 2019 and 2024, the task duration AI could handle doubled every 7 months. From 2024 into 2025, it has been doubling every 4 months. In case you were wondering, the rate at which acceleration changes over time is called jerk (or sometimes jolt). So I guess we’re jolting forward? By the end of 2026, AI agents will be able to keep working independently on coding tasks for half a week, or even longer if the jolt keeps increasing.
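To see why a shorter doubling time matters so much, here is a minimal sketch of the compounding arithmetic. The 7-month and 4-month doubling times come from the paragraph above; the function names and the 2-hour starting horizon in the example are hypothetical placeholders for illustration, not measured values. It also assumes the doubling time stays fixed, so it ignores any further "jolt."

```python
# Sketch: how a fixed doubling time compounds into yearly growth.
# Doubling times (7 and 4 months) are from the text; the 2-hour starting
# horizon below is a hypothetical placeholder, not a measured value.


def growth_factor(months: float, doubling_months: float) -> float:
    """Multiplier on task length after `months` if it doubles every `doubling_months`."""
    return 2 ** (months / doubling_months)


def project_horizon(start_hours: float, months: float, doubling_months: float) -> float:
    """Projected task length in hours, assuming the doubling time never changes."""
    return start_hours * growth_factor(months, doubling_months)


if __name__ == "__main__":
    # Yearly multiplier under each regime described in the text.
    print(f"7-month doubling: x{growth_factor(12, 7):.2f} per year")
    print(f"4-month doubling: x{growth_factor(12, 4):.2f} per year")

    # Hypothetical example: a 2-hour task horizon projected 12 months out.
    start = 2.0  # assumed starting horizon in hours (illustrative only)
    print(f"{project_horizon(start, 12, 4):.0f} hours after 12 months")
```

With a 7-month doubling time, task length grows about 3.3x per year; with a 4-month doubling time, it grows 8x per year. If the doubling time keeps shrinking, the yearly multiplier climbs even faster than this fixed-rate sketch shows.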

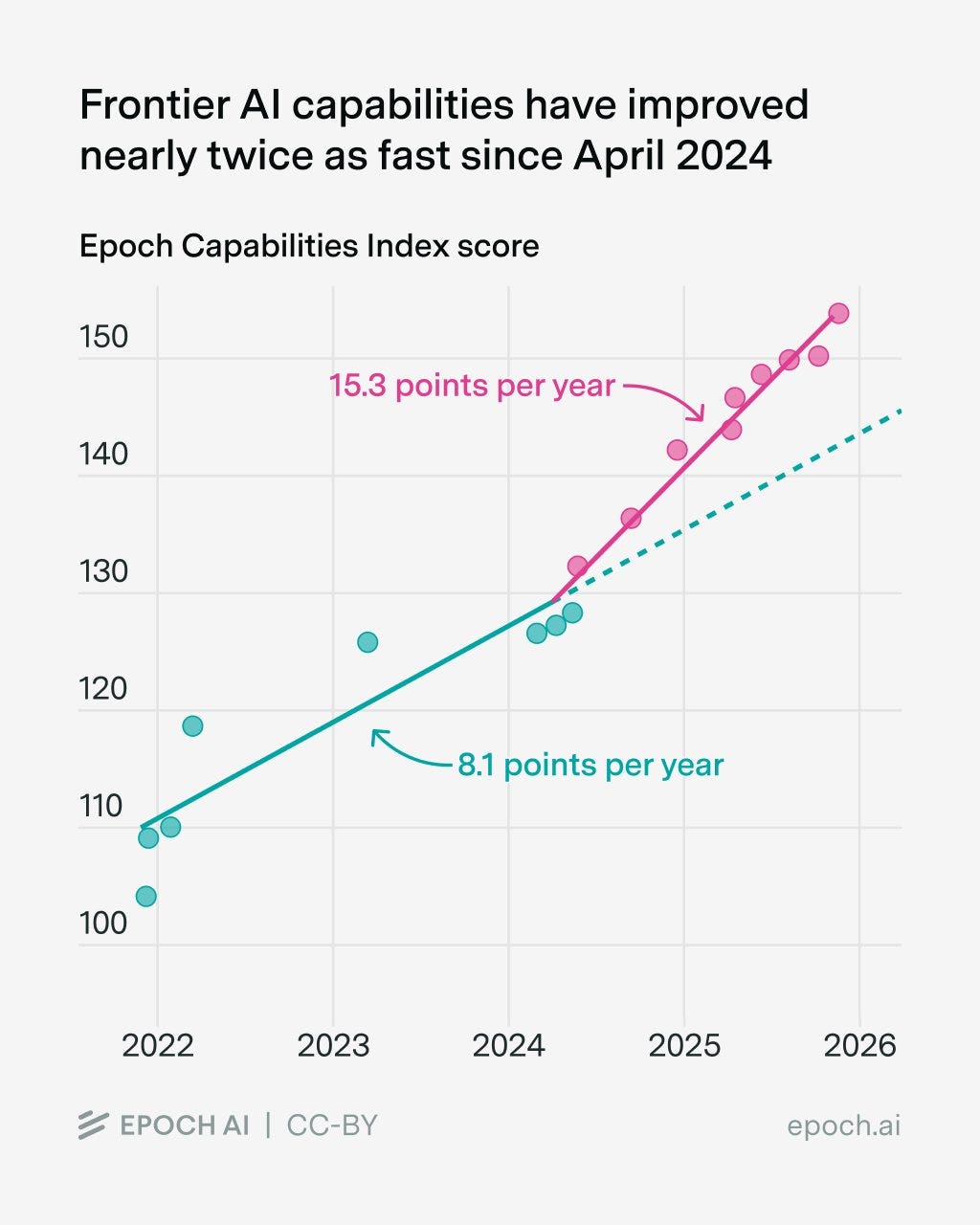

That’s not the only way it’s accelerating, either. According to one index that measures AI abilities, progress started to accelerate significantly in 2024 and has continued to the present day, jumping from 8.1 points of improvement per year to 15.3 points per year. That’s almost double. And if I were a betting man, I’d say 2026 will see that rate increase again, by a lot.

An AI called DeepWriter has achieved an all-time high score of 50.09% on something called Humanity’s Last Exam. At this rate, it won’t be long before AI really is smarter than any individual human, at least when it comes to difficult, purely technical questions.

MIT put out a study estimating that AI can already replace 11.7% of the U.S. workforce. It hasn’t yet, but that’s probably mostly due to inertia, employers’ limited awareness of what AI can do, and distrust of AI. On the flip side, U.S. GDP grew at an annualized rate of 4.3% in Q3 2025, which I suspect was at least partly due to AI’s positive economic impact. Elon Musk had an interesting comment on that: “Double-digit growth is coming within 12 to 18 months. If applied intelligence is proxy for economic growth, which it should be, triple-digit is possible in ~5 years.” Now that is something I’d like to see. The man is often a bit aspirational on his timing, but he’s rarely wrong.

There are two bottlenecks we might run into, though. The first is that it takes time to build out physical things like the factories that will pump out robots or AI chips. The second is energy. We already don’t have enough for proposed AI datacenters, let alone billions of robots. We’ll make it work, but it will be slower than many might like. Energy will be the limiting factor for everything big we want to do in the 21st century, in my opinion anyway.

I haven’t tried this, but apparently with Gemini 3 Flash, Google’s new model, you can now create an app just by talking to it. “Vibe coding.” If anyone gives it a try, let me know how it turns out.

Meta is back with something called SAM Audio: “the first unified AI model that allows you to isolate and edit sound from complex audio mixtures. This could mean isolating the guitar in a video of your band, filtering out traffic noises, or removing the sound of a dog barking in your podcast, all with text, visual, and time span prompts.”

OpenAI is trying to keep up on image generation, introducing a dedicated ChatGPT Images, which, as the name suggests, is simply better at images. GPT-5.2 is also now available to everyone, by the way. A smallish but noticeable improvement, I’d say, which is nice (I use ChatGPT and Grok a lot).

Anthropic introduced Claude Opus 4.5, which it says is the best model available for coding (until next week, anyway). Oh, and the company is also planning to go public next year.

Chinese company DeepSeek is back in the news as well, launching new reasoning models for agents.

Here’s something fun: AI researchers created an AI Village where 1,000 autonomous AI agents were dropped into a virtual world within the game Minecraft and left to cooperate, compete, trade, build, and govern themselves. The agents developed surprisingly complex behaviours, including division of labour, markets, leadership roles, and social norms. What started as a quirky experiment became a research tool for understanding multi-agent coordination, revealing both creative emergent structures and real challenges, like agents getting stuck in loops on tasks or pursuing ends that serve no particular purpose. The AI Village is a glimpse of how future AI fleets, managed like teams rather than single helpers, could transform everything from data analysis to software automation by amplifying what one person can achieve alone.

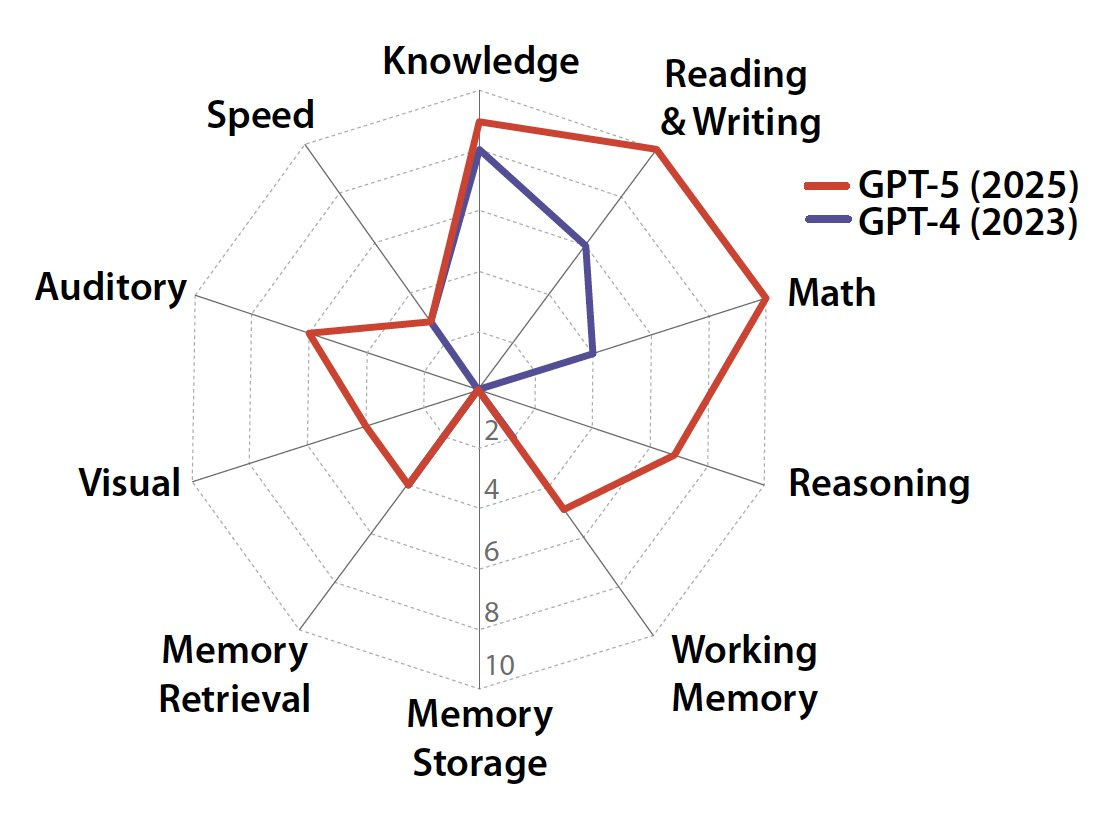

Are we going to see AGI in 2026? I’ll be honest: I don’t know. xAI reportedly thinks its Grok model will exceed human intelligence next year. A company in Tokyo says it’s already there, with its AI learning “without pre-existing datasets or human intervention.” Another group (see photo above) also expects AGI to arrive by the end of 2026, probably across multiple models. I suspect there isn’t a hard line where everyone will suddenly agree that this or that model qualifies as AGI. It’ll probably be a gradual (though, from our perspective, very rapid) transition to more and more capable models, until one day we all wake up and it’s indisputably a general intelligence.

AI Infrastructure

Let’s talk about datacenters in space.

In a nutshell,

To win the AI race, you need the most compute, data, and energy. Everyone is working within the same constraints: there’s just one internet, just one GPU provider, the same competition over data centers, energy suppliers, etc. Space changes all this. You can mass-produce satellites, and they all talk to each other in a swarm via laser links. The major AI models are all within a few months of each other in terms of capability, but no other company is even within a decade of SpaceX in launch capacity or cost. Going all-in on space-based data centers completely circumvents the bottlenecks everyone else faces. Overnight, the competitive landscape has shifted from a ‘neck and neck race’ to a ‘10-year advantage for SpaceX and xAI.’ The game is already won. It’s checkmate. Elon is all-in on taking SpaceX public to raise the capital to go all-in on space-based compute. Of course they will win at this point: they own the launch capacity, therefore they will own the compute capacity, therefore they will win the AI race.

I consider this to be a very good thing, by the way. At least with Grok, the stated goal is to maximize truth-seeking. We shall see, but I feel like this is a much better outcome than some of the alternatives.

As a proof of concept, it’s already happening: an NVIDIA-backed company called Starcloud just trained an LLM in space. It launched aboard a SpaceX Falcon rocket, of course.

In other news:

The AI infrastructure boom continues to accelerate, with US companies alone now committed to spending “a combined $569 billion on data center leases over the next several years.” That’s a $197 billion (53%) increase from what was announced in Q2 of this year. That’s not all: a new estimate says that AI datacenters in the United States alone will use 106 GW of power by 2035, an upward revision of 36% since last April.
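The percentages above can be checked with a few lines of arithmetic. This is a minimal sketch: the inputs ($569B, $197B, 106 GW, 36%) come from the paragraph, while the Q2 total and the earlier power estimate are derived here rather than separately reported. It also assumes the 36% revision is measured relative to the earlier estimate. The helper names are hypothetical.

```python
# Sketch: checking the percentages quoted in the text. Inputs come from the
# text; the "prior" values are derived here, not separately reported.
# Assumes the 36% revision is relative to the earlier estimate.


def prior_from_increase(new_total: float, increase: float) -> float:
    """Earlier total implied by a new total and its absolute increase."""
    return new_total - increase


def percent_change(old: float, new: float) -> float:
    """Percentage change from `old` to `new`."""
    return (new - old) / old * 100


def prior_from_revision(new_value: float, revision_pct: float) -> float:
    """Earlier estimate implied by an upward revision of `revision_pct` percent."""
    return new_value / (1 + revision_pct / 100)


leases_now = 569.0    # $ billions, committed data center lease spending
leases_added = 197.0  # $ billions added since the Q2 announcements
leases_q2 = prior_from_increase(leases_now, leases_added)
print(f"Q2 total: ${leases_q2:.0f}B, increase: {percent_change(leases_q2, leases_now):.0f}%")

power_2035 = 106.0  # GW, new estimate for US AI data centers by 2035
print(f"Implied earlier estimate: {prior_from_revision(power_2035, 36):.0f} GW")
```

The Q2 figure works out to $372 billion, so the $197 billion jump is indeed about 53%, and the 36% revision implies an earlier estimate of roughly 78 GW.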

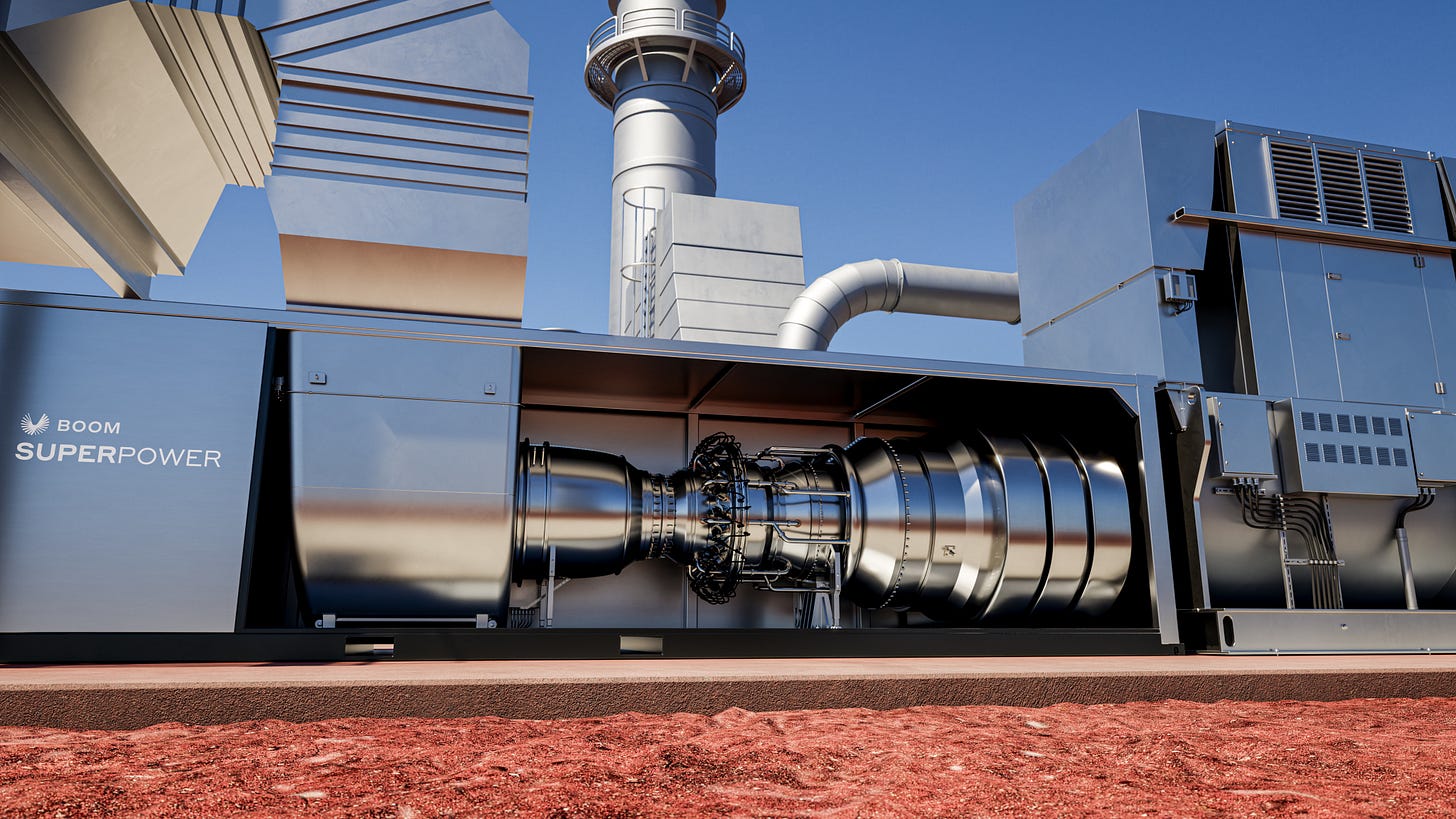

Some of that power may come from a rather surprising source: Boom Supersonic. What? Don’t they plan to make supersonic airplanes? Yes, they do, but they need to fund their development and rollout, and as part of that development they have this incredible turbine that can power datacenters, producing 42 MW per unit (minimum order of 10 units). It runs on natural gas, uses zero water, and the mini power plant fits snugly into a shipping container. They plan to start shipping in 2027.

An interesting development is that batteries may suddenly be finding their niche. When it comes to storing energy for renewables, the battery capacity needed to keep the grid running for days or longer becomes astronomical. But to ensure the smooth, continuous operation of datacenters? Suddenly that looks a lot more doable. Power outages wouldn’t last long in most cases, maybe a few hours at most, and that’s exactly the kind of gap batteries are perfect for plugging.
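The difference comes down to simple arithmetic: energy needed equals power drawn times hours covered. Here is a minimal sketch comparing a few-hour bridge with multi-day coverage. The 100 MW load, the 4-hour and 3-day durations, and the function name are hypothetical round numbers chosen for illustration, not figures from the text, and the sketch ignores conversion losses and depth-of-discharge limits.

```python
# Sketch: battery energy needed = constant load (MW) x hours covered (MWh).
# The 100 MW load and the 4-hour / 3-day durations are hypothetical round
# numbers for illustration; losses and depth-of-discharge are ignored.


def storage_needed_mwh(load_mw: float, hours: float) -> float:
    """Battery energy (MWh) needed to carry a constant load for `hours`."""
    return load_mw * hours


LOAD_MW = 100.0  # assumed data center load (illustrative only)

bridge = storage_needed_mwh(LOAD_MW, 4)           # ride out a few-hour outage
multi_day = storage_needed_mwh(LOAD_MW, 3 * 24)   # cover a three-day shortfall

print(f"4-hour bridge: {bridge:.0f} MWh")
print(f"3-day cover:   {multi_day:.0f} MWh ({multi_day / bridge:.0f}x more)")
```

For the same load, covering three days takes 18 times the storage of a 4-hour bridge. For a whole grid, the load is also far larger than any single datacenter, which is why multi-day grid storage gets so expensive.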

Great article from Pirate Wires on how the datacenter water scare isn’t real at all. Yes, datacenters do use water, but it’s a tiny amount compared to most other uses, and nowhere near the monster it’s often portrayed as. It’s not the only one, either: Google just put out a study showing that fears of AI “draining aquifers and boiling oceans” are off by about two orders of magnitude.

Alphabet (Google’s parent company) “has announced an acquisition of Intersect, a ‘data center and energy infrastructure’ company that aims to provide additional support for building out its capacity for AI.” It’s a cool $4.75 billion in cash, plus whatever debt Intersect had.

NVIDIA has struck a $20 billion deal with former rival chip manufacturer Groq, further cementing its dominance in chips for the AI industry.

Anthropic just signed a deal with Hut 8, a bitcoin miner turned datacenter provider. The company will develop ~2.3 GW of utility capacity, which Anthropic will use to help run its models.

Microsoft is planning to spend $7.5 billion on datacenters in Canada over the next couple of years, bringing the total to $19 billion when previous announcements for Canada are included.

AI Uses – science, engineering, etc.

The U.S. isn’t going to take China’s progress with AI lying down. Here’s a new announcement from the U.S. Department of Energy:

The U.S. Department of Energy (DOE) announced agreements with 24 organizations interested in collaborating to advance the Genesis Mission, a historic national effort that will use the power of artificial intelligence (AI) to accelerate discovery science, strengthen national security, and drive energy innovation. The announcement builds on President Trump’s Executive Order Removing Barriers to American Leadership In Artificial Intelligence and advances his America’s AI Action Plan released earlier this year—a directive to remove barriers to innovation, reduce dependence on foreign adversaries, and unleash the full strength of America’s scientific enterprise.

Scientists are starting to see more benefits from AI, with a significant increase in papers published by those using it. This productivity boost was greatest in the social sciences and humanities, but biology also saw an increase of over 50%. Math and physics had the smallest bump, but 36.2% isn’t anything to sneeze at. What this doesn’t answer is the question of paper quality. Is AI saving time and getting good research out there faster, or is it pumping out the scientific equivalent of AI slop?

According to Edison Scientific, science as it’s done now is too slow. The company is integrating AI Scientists throughout the entire research pipeline, from basic discovery to clinical trials. The goal is to use autonomous AI systems to accelerate real-world scientific progress, with the aspirational aim of finding cures for all diseases by mid-century. Edison just raised a $70M seed round to scale this vision and is building tools that aim to compress months of research work into hours.

xAI has announced Grok for Education, “a partnership with El Salvador…to bring personalized Grok tutoring to every public-school student in the country — over 1 million children. The world’s first nationwide AI tutor program.” Okay, now that’s pretty cool. I’m thinking it’s going to significantly boost the quality of education in that country, and serve as a template for other places.

Things are moving fast in medicine with AI (though slowly compared to what even 2026 will bring). An AI-designed antibiotic is about to be tested in a clinical trial, just a year after it was created.

This is pretty neat: a deep learning model can now predict how fruit flies form, one cell at a time. The researchers plan to eventually do this with larger organisms too (e.g., mice). This is something AI is well suited for, as the process is simply too complex, with too many individual parts, for our brains to handle.

I’m hearing more and more stories of how AI is saving people’s lives by correctly diagnosing life-threatening problems. It’s basically negligence already for doctors not to use AI, at least as an aid in diagnosis and treatment planning. In 5 years, I hope it’s criminal negligence not to.

Did I mention that AI seems particularly good at cracking unsolved math problems? Here’s one. When will we (AI) crack all currently unsolved math problems? Soon, I’d say. Maybe by 2030, certainly by 2035. Not some, not just the “easiest.” All. Along the way, we could gain entirely new mathematical understanding, maybe even entirely new branches of math that let us better understand and describe the fabric of reality.

Before I sign off, here are a few fun and maybe useful prompts I came across: one for ChatGPT that lets you turn things into interesting pencil sketches, and another for Gemini (Nano Banana) for hiding words in images.

That’s it for this edition; AI Updates will be back in your inboxes four weeks from now.

Thank you all for reading — and until next time, keep your eyes on the horizon.

-Owen

I am curious, do you think AI will truly solve every unsolved problem in mathematics, even the hardest ones like the Millennium Prize Problems, or will some resist solution in a deeper, structural way?

A couple of years ago, I would have considered Musk's statement to be pseudoscientific gobbledygook, but I think he is onto something.

Over the past few years, I've read dozens of books and hundreds of articles on the topic of human progress. In that time, I've come to understand that what we call “economic growth” is just the accumulation of knowledge alongside growing energy capture.

The more knowledge we acquire, the more capable we become of countering entropy, creating beneficial counter-entropic forms that accelerate the universe’s natural rate of energy dissipation.

Should AI add to the total “compute” of human society, allowing us to “etch” trillions of human minds onto silicon, we can certainly accelerate what we call economic growth to 10x what is common today.

The question really becomes whether our socio-economic systems can withstand this pace of change, and whether the benefits of this growth will fully filter down to everyone.